In this tutorial, you use Python 3 to create the simplest Python 'Hello World' application in Visual Studio Code. By using the Python extension, you make VS Code into a great lightweight Python IDE (which you may find a productive alternative to PyCharm).

This tutorial introduces you to VS Code as a Python environment, primarily how to edit, run, and debug code through the following tasks:

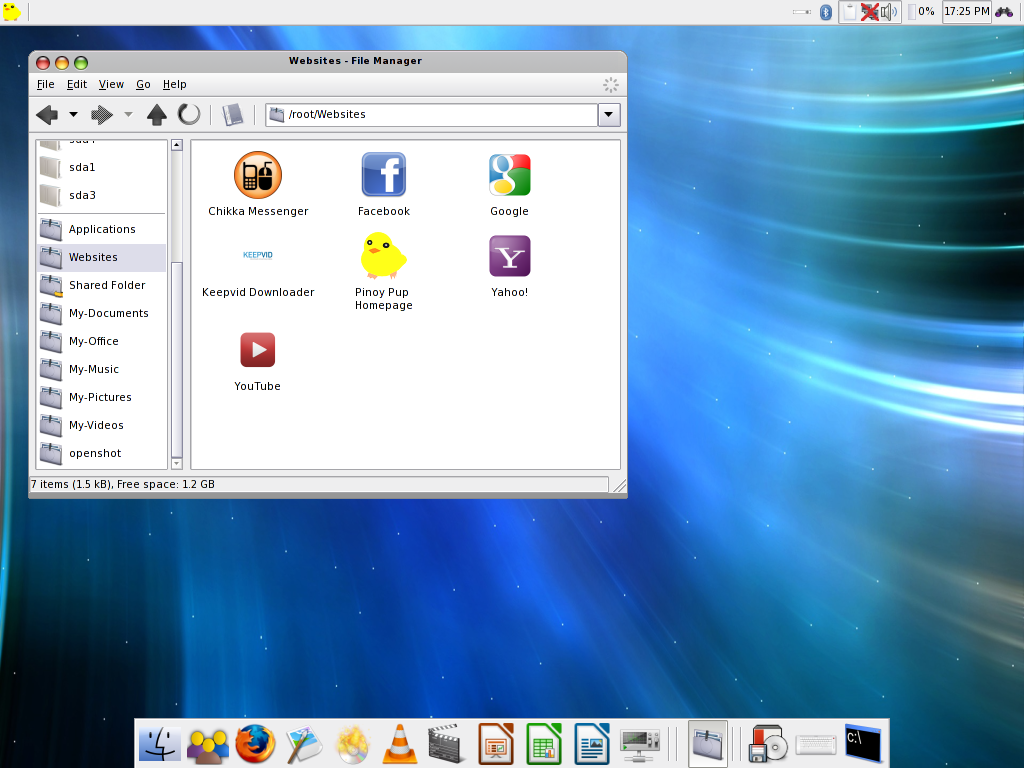

Zeal is an offline documentation browser for software developers. Download for Windows Download for Linux Get Dash for macOS. Linux Mint is a computer operating system designed to work on most modern systems, including typical x86 and x64 PCs. Linux Mint can be thought of as filling the same role as Microsoft's Windows, Apple's Mac OS, and the free BSD OS. Linux Mint is also designed to work in conjunction with. The goal of this project is to bring the look and feel of Mac OS X (latest being 10.5, Leopard) on.nix GTK based systems. This document will present the procedure to install Mac4Lin pack & tweak certain things to get that almost perfect Mac OS X like desktop.

- Write, run, and debug a Python 'Hello World' Application

- Learn how to install packages by creating Python virtual environments

- Write a simple Python script to plot figures within VS Code

This tutorial is not intended to teach you Python itself. Once you are familiar with the basics of VS Code, you can then follow any of the programming tutorials on python.org within the context of VS Code for an introduction to the language.

If you have any problems, feel free to file an issue for this tutorial in the VS Code documentation repository.

Note: You can use VS Code with Python 2 with this tutorial, but you need to make appropriate changes to the code, which are not covered here.

Prerequisites

To successfully complete this tutorial, you need to first set up your Python development environment. Specifically, this tutorial requires:

- VS Code

- VS Code Python extension

- Python 3

Install Visual Studio Code and the Python Extension

If you have not already done so, install VS Code.

Next, install the Python extension for VS Code from the Visual Studio Marketplace. For additional details on installing extensions, see Extension Marketplace. The Python extension is named Python and it's published by Microsoft.

Install a Python interpreter

Along with the Python extension, you need to install a Python interpreter. Which interpreter you use is dependent on your specific needs, but some guidance is provided below.

Windows

Install Python from python.org. You can typically use the Download Python button that appears first on the page to download the latest version.

Note: If you don't have admin access, an additional option for installing Python on Windows is to use the Microsoft Store. The Microsoft Store provides installs of Python 3.7 and Python 3.8. Be aware that you might have compatibility issues with some packages using this method.

For additional information about using Python on Windows, see Using Python on Windows at python.org.

macOS

The system install of Python on macOS is not supported. Instead, an installation through Homebrew is recommended. To install Python using Homebrew on macOS use brew install python3 at the Terminal prompt.

Note: On macOS, make sure the location of your VS Code installation is included in your PATH environment variable. See these setup instructions for more information.

Linux

The built-in Python 3 installation on Linux works well, but to install other Python packages you must install pip with get-pip.py.

Other options

Data Science: If your primary purpose for using Python is Data Science, then you might consider a download from Anaconda. Anaconda provides not just a Python interpreter, but many useful libraries and tools for data science.

Windows Subsystem for Linux: If you are working on Windows and want a Linux environment for working with Python, the Windows Subsystem for Linux (WSL) is an option for you. If you choose this option, you'll also want to install the Remote - WSL extension. For more information about using WSL with VS Code, see VS Code Remote Development or try the Working in WSL tutorial, which will walk you through setting up WSL, installing Python, and creating a Hello World application running in WSL.

Verify the Python installation

To verify that you've installed Python successfully on your machine, run one of the following commands (depending on your operating system):

Linux/macOS: open a Terminal window and run python3 --version.

Windows: open a command prompt and run py -3 --version.

If the installation was successful, the output window should show the version of Python that you installed.
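As a complementary check from inside Python itself, a short script can report which interpreter is actually running. This is a minimal sketch rather than part of the original tutorial, and the function name describe_interpreter is a hypothetical helper:

```python
import platform
import sys


def describe_interpreter():
    """Return a one-line summary of the running Python interpreter."""
    # platform.python_version() returns the 'major.minor.patch' string,
    # and sys.executable is the path of the interpreter binary in use.
    return f"Python {platform.python_version()} at {sys.executable}"


print(describe_interpreter())
```

Running it with different interpreters is a quick way to see which installation a given command resolves to.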

Note: You can use the py -0 command in the VS Code integrated terminal to view the versions of Python installed on your machine. The default interpreter is identified by an asterisk (*).

Start VS Code in a project (workspace) folder

Using a command prompt or terminal, create an empty folder called 'hello' (mkdir hello), navigate into it (cd hello), and open VS Code in that folder (code .).

Note: If you're using an Anaconda distribution, be sure to use an Anaconda command prompt.

By starting VS Code in a folder, that folder becomes your 'workspace'. VS Code stores settings that are specific to that workspace in .vscode/settings.json, which are separate from user settings that are stored globally.

Alternately, you can run VS Code through the operating system UI, then use File > Open Folder to open the project folder.

Select a Python interpreter

Python is an interpreted language, and in order to run Python code and get Python IntelliSense, you must tell VS Code which interpreter to use.

From within VS Code, select a Python 3 interpreter by opening the Command Palette (⇧⌘P (Windows, Linux Ctrl+Shift+P)), start typing the Python: Select Interpreter command to search, then select the command. You can also use the Select Python Environment option on the Status Bar if available (it may already show a selected interpreter, too).

The command presents a list of available interpreters that VS Code can find automatically, including virtual environments. If you don't see the desired interpreter, see Configuring Python environments.

Note: When using an Anaconda distribution, the correct interpreter should have the suffix ('base':conda), for example Python 3.7.3 64-bit ('base':conda).

Selecting an interpreter sets the python.pythonPath value in your workspace settings to the path of the interpreter. To see the setting, select File > Preferences > Settings (Code > Preferences > Settings on macOS), then select the Workspace Settings tab.

Note: If you select an interpreter without a workspace folder open, VS Code sets python.pythonPath in your user settings instead, which sets the default interpreter for VS Code in general. The user setting makes sure you always have a default interpreter for Python projects. The workspace setting lets you override the user setting.

Create a Python Hello World source code file

From the File Explorer toolbar, select the New File button on the hello folder.

Name the file hello.py, and it automatically opens in the editor.

By using the .py file extension, you tell VS Code to interpret this file as a Python program, so that it evaluates the contents with the Python extension and the selected interpreter.

Note: The File Explorer toolbar also allows you to create folders within your workspace to better organize your code. You can use the New folder button to quickly create a folder.

Now that you have a code file in your Workspace, enter the following source code in hello.py:
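The listing itself is missing from this copy of the tutorial. Judging from the later references to a msg variable, a print call on line 2, and the output 'Hello World', it was presumably close to the following reconstruction:

```python
msg = "Hello World"  # the string the tutorial later inspects in the debugger
print(msg)           # line 2: the breakpoint in the debugging section goes here
```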

When you start typing print, notice how IntelliSense presents auto-completion options.

IntelliSense and auto-completions work for standard Python modules as well as other packages you've installed into the environment of the selected Python interpreter. It also provides completions for methods available on object types. For example, because the msg variable contains a string, IntelliSense provides string methods when you type msg followed by a period.

Feel free to experiment with IntelliSense some more, but then revert your changes so you have only the msg variable and the print call, and save the file (⌘S (Windows, Linux Ctrl+S)).

For full details on editing, formatting, and refactoring, see Editing code. The Python extension also has full support for Linting.

Run Hello World

It's simple to run hello.py with Python. Just click the Run Python File in Terminal play button in the top-right side of the editor.

The button opens a terminal panel in which your Python interpreter is automatically activated, then runs python3 hello.py (macOS/Linux) or python hello.py (Windows).

There are three other ways you can run Python code within VS Code:

- Right-click anywhere in the editor window and select Run Python File in Terminal (which saves the file automatically).

- Select one or more lines, then press Shift+Enter or right-click and select Run Selection/Line in Python Terminal. This command is convenient for testing just a part of a file.

- From the Command Palette (⇧⌘P (Windows, Linux Ctrl+Shift+P)), select the Python: Start REPL command to open a REPL terminal for the currently selected Python interpreter. In the REPL, you can then enter and run lines of code one at a time.

Configure and run the debugger

Let's now try debugging our simple Hello World program.

First, set a breakpoint on line 2 of hello.py by placing the cursor on the print call and pressing F9. Alternately, just click in the editor's left gutter, next to the line numbers. When you set a breakpoint, a red circle appears in the gutter.

Next, to initialize the debugger, press F5. Since this is your first time debugging this file, a configuration menu will open from the Command Palette allowing you to select the type of debug configuration you would like for the opened file.

Note: VS Code uses JSON files for all of its various configurations; launch.json is the standard name for a file containing debugging configurations.

These different configurations are fully explained in Debugging configurations; for now, just select Python File, which is the configuration that runs the current file shown in the editor using the currently selected Python interpreter.

The debugger will stop at the first breakpoint in the file. The current line is indicated with a yellow arrow in the left margin. If you examine the Local variables window at this point, you will see that the now-defined msg variable appears in the Local pane.

A debug toolbar appears along the top with the following commands from left to right: continue (F5), step over (F10), step into (F11), step out (⇧F11 (Windows, Linux Shift+F11)), restart (⇧⌘F5 (Windows, Linux Ctrl+Shift+F5)), and stop (⇧F5 (Windows, Linux Shift+F5)).

The Status Bar also changes color (orange in many themes) to indicate that you're in debug mode. The Python Debug Console also appears automatically in the lower right panel to show the commands being run, along with the program output.

To continue running the program, select the continue command on the debug toolbar (F5). The debugger runs the program to the end.

Tip: Debugging information can also be seen by hovering over code, such as variables. In the case of msg, hovering over the variable will display the string Hello World in a box above the variable.

You can also work with variables in the Debug Console. (If you don't see it, select Debug Console in the lower right area of VS Code, or select it from the ... menu.) Then try entering the following lines, one by one, at the > prompt at the bottom of the console:
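The lines to enter are missing from this copy. As an assumed stand-in, the expressions below exercise the msg variable from hello.py with standard string methods. In the Debug Console you would type each expression on its own, without the print call; msg is defined at the top only so that the sketch runs by itself.

```python
msg = "Hello World"  # at the breakpoint, msg already exists in the Debug Console

print(msg)               # the current value of the variable
print(msg.capitalize())  # first character upper-case, the rest lower-case
print(msg.split())       # split on whitespace into a list of words
```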

Select the blue Continue button on the toolbar again (or press F5) to run the program to completion. 'Hello World' appears in the Python Debug Console if you switch back to it, and VS Code exits debugging mode once the program is complete.

If you restart the debugger, the debugger again stops on the first breakpoint.

To stop running a program before it's complete, use the red square stop button on the debug toolbar (⇧F5 (Windows, Linux Shift+F5)), or use the Run > Stop debugging menu command.

For full details, see Debugging configurations, which includes notes on how to use a specific Python interpreter for debugging.

Tip: Use Logpoints instead of print statements: Developers often litter source code with print statements to quickly inspect variables without necessarily stepping through each line of code in a debugger. In VS Code, you can instead use Logpoints. A Logpoint is like a breakpoint except that it logs a message to the console and doesn't stop the program. For more information, see Logpoints in the main VS Code debugging article.

Install and use packages

Let's now run an example that's a little more interesting. In Python, packages are how you obtain any number of useful code libraries, typically from PyPI. For this example, you use the matplotlib and numpy packages to create a graphical plot as is commonly done with data science. (Note that matplotlib cannot show graphs when running in the Windows Subsystem for Linux as it lacks the necessary UI support.)

Return to the Explorer view (the top-most icon on the left side, which shows files), create a new file called standardplot.py, and paste in the following source code:
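The listing is missing from this copy of the tutorial. The sketch below is a reconstruction under assumptions: the surrounding text only establishes that matplotlib and numpy are imported with as aliases and that a plot window appears, so the sine curve and the sample range are illustrative choices.

```python
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 20, 100)  # 100 evenly spaced sample points from 0 to 20
plt.plot(x, np.sin(x))       # draw the sine of each sample point
plt.show()                   # open the plot window
```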

Tip: If you enter the above code by hand, you may find that auto-completions change the names after the as keywords when you press Enter at the end of a line. To avoid this, type a space, then Enter.

Next, try running the file in the debugger using the 'Python: Current file' configuration as described in the last section.

Unless you're using an Anaconda distribution or have previously installed the matplotlib package, you should see the message "ModuleNotFoundError: No module named 'matplotlib'". Such a message indicates that the required package isn't available in your system.

To install the matplotlib package (which also installs numpy as a dependency), stop the debugger and use the Command Palette to run Terminal: Create New Integrated Terminal (⌃⇧` (Windows, Linux Ctrl+Shift+`)). This command opens a command prompt for your selected interpreter.

A best practice among Python developers is to avoid installing packages into a global interpreter environment. You instead use a project-specific virtual environment that contains a copy of a global interpreter. Once you activate that environment, any packages you then install are isolated from other environments. Such isolation reduces many complications that can arise from conflicting package versions. To create a virtual environment and install the required packages, enter the following commands as appropriate for your operating system:

Note: For additional information about virtual environments, see Environments.

Create and activate the virtual environment

Note: When you create a new virtual environment, you should be prompted by VS Code to set it as the default for your workspace folder. If selected, the environment will automatically be activated when you open a new terminal.

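For Windows: run py -3 -m venv .venv to create the environment (the folder name .venv is a common convention), then .venv\scripts\activate to activate it.

If the activate command generates the message 'Activate.ps1 is not digitally signed. You cannot run this script on the current system.', then you need to temporarily change the PowerShell execution policy to allow scripts to run (see About Execution Policies in the PowerShell documentation), for example with Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope Process.

For macOS/Linux: run python3 -m venv .venv to create the environment, then source .venv/bin/activate to activate it.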

Select your new environment by using the Python: Select Interpreter command from the Command Palette.
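To confirm that the selected interpreter really belongs to a virtual environment, you can compare two values the interpreter exposes. This is a minimal sketch, and in_virtual_environment is a hypothetical helper name. It applies to environments created with the venv module; conda environments are organized differently and are not detected by this check.

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a venv environment."""
    # Inside an environment created by the venv module, sys.prefix points at
    # the environment folder, while sys.base_prefix still points at the
    # installation the environment was created from.
    return sys.prefix != sys.base_prefix


print("interpreter:", sys.executable)
print("virtual environment active:", in_virtual_environment())
```

Run it once from a terminal with the environment activated and once without to see the difference.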

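Install the packages: with the environment activated, run python -m pip install matplotlib (python3 -m pip install matplotlib on macOS/Linux).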

Rerun the program now (with or without the debugger) and after a few moments a plot window appears with the output.

Once you are finished, type deactivate in the terminal window to deactivate the virtual environment.

For additional examples of creating and activating a virtual environment and installing packages, see the Django tutorial and the Flask tutorial.

Next steps

You can configure VS Code to use any Python environment you have installed, including virtual and conda environments. You can also use a separate environment for debugging. For full details, see Environments.

To learn more about the Python language, follow any of the programming tutorials listed on python.org within the context of VS Code.

To learn to build web apps with the Django and Flask frameworks, see the Django tutorial and the Flask tutorial.

There is then much more to explore with Python in Visual Studio Code:

- Editing code - Learn about autocomplete, IntelliSense, formatting, and refactoring for Python.

- Linting - Enable, configure, and apply a variety of Python linters.

- Debugging - Learn to debug Python both locally and remotely.

- Testing - Configure test environments and discover, run, and debug tests.

- Settings reference - Explore the full range of Python-related settings in VS Code.

Now that you have the basic pieces in place, it is time to build your application. This section covers some of the more common issues that you may encounter in bringing your UNIX application to OS X. These issues apply largely without regard to what type of development you are doing.

Using GNU Autoconf, Automake, and Autoheader

If you are bringing a preexisting command-line utility to OS X that uses GNU autoconf, automake, or autoheader, you will probably find that it configures itself without modification (though the resulting configuration may be insufficient). Just run configure and make as you would on any other UNIX-based system.

If running the configure script fails because it doesn’t understand the architecture, try replacing the project’s config.sub and config.guess files with those available in /usr/share/automake-1.6. If you are distributing applications that use autoconf, you should include an up-to-date version of config.sub and config.guess so that OS X users don’t have to do anything extra to build your project.

If that still fails, you may need to run /usr/bin/autoconf on your project to rebuild the configure script before it works. OS X includes autoconf in the BSD tools package. Beyond these basics, if the project does not build, you may need to modify your makefile using some of the tips provided in the following sections. After you do that, more extensive refactoring may be required.

Some programs may use autoconf macros that are not supported by the version of autoconf that shipped with OS X. Because autoconf changes periodically, you may actually need to get a new version of autoconf if you need to build the very latest sources for some projects. In general, most projects include a prebuilt configure script with releases, so this is usually not necessary unless you are building an open source project using sources obtained from CVS or from a daily source snapshot.

However, if you find it necessary to upgrade autoconf, you can get a current version from http://www.gnu.org/software/autoconf/. Note that autoconf, by default, installs in /usr/local/, so you may need to modify your PATH environment variable to use the newly updated version. Do not attempt to replace the version installed in /usr/.

For additional information about using the GNU autotoolset, see http://autotoolset.sourceforge.net/tutorial.html and the manual pages autoconf, automake, and autoheader.

Compiling for Multiple CPU Architectures

Because the Macintosh platform includes more than one processor family, it is often important to compile software for multiple processor architectures. For example, libraries should generally be compiled as universal binaries even if you are exclusively targeting an Intel-based Macintosh computer, as your library may be used by a PowerPC binary running under Rosetta. For executables, if you plan to distribute compiled versions, you should generally create universal binaries for convenience.

When compiling programs for architectures other than your default host architecture, such as compiling for a ppc64 or Intel-based Macintosh target on a PowerPC-based build host, there are a few common problems that you may run into. Most of these problems result from one of the following mistakes:

- Assuming that the build host is architecturally similar to the target architecture and will thus be capable of executing intermediate build products

- Trying to determine target-processor-specific information at configuration time (by compiling and executing small code snippets) rather than at compile time (using macro tests) or execution time (for example, by using conditional byte swap functions)

Whenever cross-compiling occurs, extra care must be taken to ensure that the target architecture is detected correctly. This is particularly an issue when generating a binary containing object code for more than one architecture.

In many cases, binaries containing object code for more than one architecture can be generated simply by running the normal configuration script, then overriding the architecture flags at compile time.

For example, you might run the configure script as usual, followed by make with CFLAGS and LDFLAGS overridden to include -arch i386 -arch ppc, to generate a universal binary (for Intel-based and PowerPC-based Macintosh computers). To generate a 4-way universal binary that includes 64-bit versions, you would add -arch ppc64 and -arch x86_64 to the CFLAGS and LDFLAGS.

Note: If you are using an older version of gcc and your makefile passes LDFLAGS to gcc instead of passing them directly to ld, you may need to specify the linker flags as -Wl,-syslibroot,/Developer/SDKs/MacOSX10.4u.sdk. This tells the compiler to pass the unknown flags to the linker without interpreting them. Do not pass the LDFLAGS in this form to ld, however; ld does not currently support the -Wl syntax.

If you need to support an older version of gcc and your makefile passes LDFLAGS to both gcc and ld, you may need to modify it to pass this argument in different forms, depending on which tool is being used. Fortunately, these cases are rare; most makefiles either pass LDFLAGS to gcc or ld, but not both. Newer versions of gcc support -syslibroot directly.

If your makefile does not explicitly pass the contents of LDFLAGS to gcc or ld, they may still be passed to one or the other by a make rule. If you are using the standard built-in make rules, the contents of LDFLAGS are passed directly to ld. If in doubt, assume that it is passed to ld. If you get an invalid flag error, you guessed incorrectly.

If your makefile uses gcc to run the linker instead of invoking it directly, you must specify a list of target architectures to link when working with universal binary object (.o) files even if you are not using all of the architectures of the object file. If you don't, you will not create a universal binary, and you may also get a linker error. For more information about 64-bit executables, see 64-Bit Transition Guide.

However, applications that make configuration-time decisions about the size of data structures will generally fail to build correctly in such an environment (since those sizes may need to be different depending on whether the compiler is executing a ppc pass, a ppc64 pass, or an i386 pass). When this happens, the tool must be configured and compiled for each architecture as separate executables, then glued together manually using lipo.

In rare cases, software not written with cross-compilation in mind will make configure-time decisions by executing code on the build host. In these cases, you will have to manually alter either the configuration scripts or the resulting headers to be appropriate for the actual target architecture (rather than the build architecture). In some cases, this can be solved by telling the configure script that you are cross-compiling using the --host, --build, and --target flags. However, this may simply result in defaults for the target platform being inserted, which doesn’t really solve the problem.

The best fix is to replace configure-time detection of endianness, data type sizes, and so on with compile-time or run-time detection. For example, instead of testing the architecture for endianness to obtain consistent byte order in a file, you should do one of the following:

- Use C preprocessor macros like __BIG_ENDIAN__ and __LITTLE_ENDIAN__ to test endianness at compile time.

- Use functions like htonl, htons, ntohl, and ntohs to guarantee a big-endian representation on any architecture.

- Extract individual bytes by bitwise masking and shifting (for example, lowbyte = word & 0xff; nextbyte = (word >> 8) & 0xff; and so on).

Similarly, instead of performing elaborate tests to determine whether to use int or long for a 4-byte piece of data, you should simply use a standard sized type such as uint32_t.
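The byte-order techniques above concern C code, but the idea can be illustrated compactly in Python, whose socket and struct modules expose the same conversions. This is a sketch under that assumption, not code from the guide; to_big_endian_bytes is a hypothetical helper.

```python
import socket
import struct
import sys


def to_big_endian_bytes(word):
    """Serialize a 32-bit unsigned value, most significant byte first,
    using only masking and shifting (independent of host byte order)."""
    return bytes([
        (word >> 24) & 0xFF,
        (word >> 16) & 0xFF,
        (word >> 8) & 0xFF,
        word & 0xFF,
    ])


word = 0x12345678

# Masking and shifting gives the same bytes on every host.
shifted = to_big_endian_bytes(word)

# The "!" prefix selects network (big-endian) order with a fixed 4-byte size.
packed = struct.pack("!I", word)

# socket.htonl converts host order to network order, so writing its result
# in native order ("=I") also yields big-endian bytes.
via_htonl = struct.pack("=I", socket.htonl(word))

print("host byte order:", sys.byteorder)
print(shifted.hex(), packed.hex(), via_htonl.hex())
```

All three approaches produce the same byte sequence regardless of the host, which is the property that configure-time endianness tests try, less reliably, to achieve.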

Note: Not all script execution is incompatible with cross-compiling. A number of open source tools (GTK, for example) use script execution to determine the presence or absence of libraries, determine their versions and locations, and so on.

In those cases, you must be certain that the info script associated with the universal binary installation (or the target platform installation if you are strictly cross-compiling) is the one that executes during the configuration process, rather than the info script associated with an installation specific to your host architecture.

There are a few other caveats when working with universal binaries:

- The library archive utility, ar, cannot work with libraries containing code for more than one architecture (or single-architecture libraries generated with lipo) after ranlib has added a table of contents to them. Thus, if you need to add additional object files to a library, you must keep a separate copy without a TOC.

- The -M switch to gcc (to output dependency information) is not supported when multiple architectures are specified on the command line. Depending on your makefile, this may require substantial changes to your makefile rules. For autoconf-based configure scripts, the flag --disable-dependency-tracking should solve this problem. For projects using automake, it may be necessary to run automake with the -i flag to disable dependency checks or put no-dependencies in the AUTOMAKE_OPTIONS variable in each Makefile.am file.

- If you run into problems building a universal binary for an open source tool, the first thing you should do is to get the latest version of the source code. This does two things:

  - Ensures that the version of autoconf and automake used to generate the configuration scripts is reasonably current, reducing the likelihood of build failures, execution failures, backwards or forwards compatibility problems, and other idiosyncratic or downright broken behavior.

  - Reduces the likelihood of building a version of an open source tool that contains known security holes or other serious bugs.

- Older versions of autoconf do not gracefully handle the case where --target, --host, and --build are not all specified. Different versions also behave differently when you specify only one or two of these flags. Thus, you should always specify all three of these options if you are running an autoconf-generated configure script with intent to cross-compile.

- Some earlier versions of autoconf handle cross-compiling poorly. If your tool contains a configure script generated by an early autoconf, you may be able to significantly improve things by replacing some of the config.* files (and config.guess in particular) with updated copies from the version of autoconf that comes with OS X.

  This will not always work, however, in which case it may be necessary to actually regenerate the configure script by running autoconf. To do this, simply change into the root directory of the project and run /usr/bin/autoconf. It will automatically detect the configure.in file and use it to generate a new configure script. If you get warnings, you should first try a web search for the error message, as someone else may have already run into the problem (possibly on a different tool) and found a solution.

  If you get errors about missing AC_ macros, you may need to download a copy of libraries on which your tool depends and copy their .m4 autoconf configuration files into /usr/share/autoconf. Alternately, you can add the macros to the file acinclude.m4 in your project’s main directory and autoconf should automatically pick up those macros.

  You may, in some cases, need to rerun automake and/or autoheader if your tool uses them. Be prepared to run into missing AM_ and AH_ macros if you do, however. Because of the added risk of missing macros, this should generally only be done if running autoconf by itself does not correct a build problem.

  Important: Be sure to make a backup copy of the original scripts, headers, and other generated files (or, ideally, the entire project directory) before running autoheader or automake.

Different makefiles and configure scripts handle command-line overrides in different ways. The most consistent way to force these overrides is to specify them prior to the command. For example, setting CFLAGS in the environment immediately before the command should generally result in those flags being added to CFLAGS during compilation. However, this behavior is not completely consistent across makefiles from different projects.

For additional information about autoconf, automake, and autoheader, you can view the autoconf documentation at http://www.gnu.org/software/autoconf/manual/index.html.

For additional information on compiler flags for Intel-based Macintosh computers, modifying code to support little-endian CPUs, and other porting concerns, you should read Universal Binary Programming Guidelines, Second Edition, available from the ADC Reference Library.

Cross-Compiling a Self-Bootstrapping Tool

Probably the most difficult situation you may experience is that of a self-bootstrapping tool—a tool that uses a (possibly stripped-down) copy of itself to either compile the final version of itself or to construct support files or libraries. Some examples include TeX, Perl, and gcc.

Ideally, you should be able to build the executable as a universal binary in a single build pass. If that is possible, everything “just works”, since the universal binary can execute on the host. However, this is not always possible. If you have to cross-compile and glue the pieces together with lipo, this obviously will not work.

If the build system is written well, the tool will bootstrap itself by building a version compiled for the host, then use that to build the pieces for the target, and finally compile a version of the binary for the target. In that case, you should not have to do anything special for the build to succeed.

In some cases, however, it is not possible to simultaneously compile for multiple architectures and the build system wasn’t designed for cross-compiling. In those cases, the recommended solution is to pre-install a version of the tool for the host architecture, then modify the build scripts to rename the target’s intermediate copy of the tool and copy the host’s copy in place of that intermediate build product (for example, mv miniperl miniperl-target; cp /usr/bin/perl miniperl).

By doing this, later parts of the build script will execute the version of the tool built for the host architecture. Assuming there are no architecture dependencies in the dependent tools or support files, they should build correctly using the host’s copy of the tool. Once the dependent build is complete, you should swap back in the original target copy in the final build phase. The trick is in figuring out when to have each copy in place.

Conditional Compilation on OS X

You will sometimes find it necessary to use conditional compilation to make your code behave differently depending on whether certain functionality is available.

Older code sometimes used conditional statements like #ifdef __MACH__ or #ifdef __APPLE__ to try to determine whether it was being compiled on OS X or not. While this seems appealing as a quick way of getting ported, it ultimately causes more work in the long run. For example, if you make the assumption that a particular function does not exist in OS X and conditionally replace it with your own version that implements the same functionality as a wrapper around a different API, your application may no longer compile or may be less efficient if Apple adds that function in a later version.

Apart from displaying or using the name of the OS for some reason (which you can more portably obtain from the uname API), code should never behave differently on OS X merely because it is running on OS X. Code should behave differently because OS X behaves differently in some way—offering an additional feature, not offering functionality specific to another operating system, and so on. Thus, for maximum portability and maintainability, you should focus on that difference and make the conditional compilation dependent upon detecting the difference rather than dependent upon the OS itself. This not only makes it easier to maintain your code as OS X evolves, but also makes it easier to port your code to other platforms that may support different but overlapping feature sets.
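As a minimal sketch of testing for the difference rather than for the OS, the wrapper below chooses between a system function and a local fallback based on a feature macro. HAVE_STRLCPY and copy_string are hypothetical names. The macro is assumed to be defined by a configuration step (for example, an autoconf check) when strlcpy is available. Nothing in the code asks which operating system it is being built on.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * HAVE_STRLCPY is a hypothetical macro that a configure step would define
 * when a test program calling strlcpy compiles and links.
 */
size_t copy_string(char *dst, const char *src, size_t size)
{
    assert(dst != NULL && src != NULL);
#ifdef HAVE_STRLCPY
    /* The platform provides strlcpy, so use it directly. */
    return strlcpy(dst, src, size);
#else
    /* Fallback with the same contract: copy at most size - 1 bytes,
     * always terminate when size > 0, and return strlen(src). */
    size_t len = strlen(src);
    if (size > 0) {
        size_t n = (len < size - 1) ? len : size - 1;
        memcpy(dst, src, n);
        dst[n] = '\0';
    }
    return len;
#endif
}
```

If a later release of any platform adds the function, only the configuration result changes; the source stays the same.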

The most common reasons you might want to use such conditional statements are attempts to detect differences in:

processor architecture

byte order

file system case sensitivity

other file system properties

compiler, linker, or toolchain differences

availability of application frameworks

availability of header files

support for a function or feature

Instead it is better to figure out why your code needs to behave differently in OS X, then use conditional compilation techniques that are appropriate for the actual root cause.

The misuse of these conditionals often causes problems. For example, if you assume that certain frameworks are present if those macros are defined, you might get compile failures when building a 64-bit executable. If you instead test for the availability of the framework, you might be able to fall back on an alternative mechanism such as X11, or you might skip building the graphical portions of the application entirely.

For example, OS X provides preprocessor macros to determine the CPU architecture and byte order. These include:

__i386__: Intel (32-bit)

__x86_64__: Intel (64-bit)

__ppc__: PowerPC (32-bit)

__ppc64__: PowerPC (64-bit)

__BIG_ENDIAN__: Big endian CPU

__LITTLE_ENDIAN__: Little endian CPU

__LP64__: The LP64 (64-bit) data model
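The byte-order macros can be used as in the following sketch, which writes a 32-bit value to a buffer in big-endian order. The function names (swap32, to_big_endian32, store_be32) are illustrative only. Because toolchains on other platforms may define neither macro, the sketch assumes a runtime check is an acceptable fallback.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Reverse the byte order of a 32-bit value. */
static uint32_t swap32(uint32_t v)
{
    return (v >> 24) | ((v >> 8) & 0x0000ff00u) |
           ((v << 8) & 0x00ff0000u) | (v << 24);
}

/* Return v laid out in big-endian byte order on the current CPU. */
uint32_t to_big_endian32(uint32_t v)
{
#if defined(__BIG_ENDIAN__)
    return v;                        /* already big endian */
#elif defined(__LITTLE_ENDIAN__)
    return swap32(v);
#else
    /* Neither macro is defined by this toolchain: decide at run time. */
    const uint16_t probe = 1;
    return (*(const unsigned char *)&probe == 1) ? swap32(v) : v;
#endif
}

/* Store v into out[0..3], most significant byte first. */
void store_be32(unsigned char *out, uint32_t v)
{
    assert(out != NULL);
    uint32_t be = to_big_endian32(v);
    memcpy(out, &be, sizeof be);
}
```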

In addition, using tools like autoconf, you can create arbitrary conditional compilation on nearly any practical feature of the installation, from testing to see if a file exists to seeing if you can successfully compile a piece of code.

For example, if a portion of your project requires a particular application framework, you can compile a small test program whose main function calls a function in that framework. If the test program compiles and links successfully, the application framework is present for the specified CPU architecture.

You can even use this technique to determine whether to include workarounds for known bugs in Apple or third-party libraries and frameworks, either by testing the versions of those frameworks or by providing a test case that reproduces the bug and checking the results.

For example, in OS X, poll does not support device files such as /dev/tty. If you just avoid poll if your code is running on OS X, you are making two assumptions that you should not make:

You are assuming that what you are doing will always be unsupported. OS X is an evolving operating system that adds new features on a regular basis, so this is not necessarily a valid assumption.

You are assuming that OS X is the only platform that does not support using poll on device files. While this is probably true for most device files, not all device files support poll in all operating systems, so this is also not necessarily a valid assumption.

A better solution is to use a configuration-time test that tries to use poll on a device file, and if the call fails, disables the use of poll. If using poll provides some significant advantage, it may be better to perform a runtime test early in your application execution, then use poll only if that test succeeds. By testing for support at runtime, your application can use the poll API if it is supported by a particular version of any operating system, falling back gracefully if it is not supported.
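A runtime test of this kind might look like the sketch below. The function name poll_usable_on is hypothetical. Treating an error return or a POLLNVAL result as "not supported" is an assumption about how an unsupported device reports itself, so adjust the check to the failure you actually observe.

```c
#include <assert.h>
#include <fcntl.h>
#include <poll.h>
#include <unistd.h>

/*
 * Return 1 if poll() appears usable on the file at path, 0 otherwise.
 * The probe opens the file, polls it once without blocking, and treats
 * an error return or a POLLNVAL result as "not supported".
 */
int poll_usable_on(const char *path)
{
    assert(path != NULL);

    /* O_NOCTTY keeps a terminal device from becoming the controlling tty. */
    int fd = open(path, O_RDONLY | O_NONBLOCK | O_NOCTTY);
    if (fd < 0)
        return 0;                    /* cannot open, so cannot poll */

    struct pollfd pfd = { .fd = fd, .events = POLLIN, .revents = 0 };
    int rc = poll(&pfd, 1, 0);       /* timeout 0: return immediately */
    close(fd);

    if (rc < 0)
        return 0;                    /* the call itself failed */
    if (rc > 0 && (pfd.revents & POLLNVAL))
        return 0;                    /* descriptor rejected by poll */
    return 1;
}
```

An application could call poll_usable_on("/dev/tty") once at startup and select its event loop based on the result.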

A good rule is to always test for the most specific thing that is guaranteed to meet your requirements. If you need a framework, test for the framework. If you need a library, test for the library. If you need a particular compiler version, test the compiler version. And so on. By doing this, you increase your chances that your application will continue to work correctly without modification in the future.

Choosing a Compiler

OS X ships two compilers and their corresponding toolchains. The default compiler is based on GCC 4.2. In addition, a compiler based on GCC 4.0 is provided. Older versions of Xcode also provide prior versions. Compiling for 64-bit PowerPC and Intel-based Macintosh computers is only supported in version 4.0 and later. Compiling 64-bit kernel extensions is only supported in version 4.2 and later.

Always try to compile your software using GCC 4 because future toolchains will be based on GCC version 4 or later. However, because GCC 4 is a relatively new toolchain, you may find bugs that prevent compiling certain programs.

Use of the legacy GCC 2.95.2-based toolchain is strongly discouraged unless you have to maintain compatibility with OS X version 10.1.

If you run into a problem that looks like a compiler bug, try using a different version of GCC. You can run a different version by setting the CC environment variable in your Makefile. For example, CC=gcc-4.0 chooses GCC 4.0. In Xcode, you can change the compiler setting on a per-project basis or a per-file basis by selecting a different compiler version in the appropriate build settings inspector.

Setting Compiler Flags

When building your projects in OS X, simply supplying or modifying the compiler flags of a few key options is all you need to do to port most programs. These are usually specified by either the CFLAGS or LDFLAGS variable in your makefile, depending on which part of the compiler chain interprets the flags. Unless otherwise specified, you should add these flags to CFLAGS if needed.

Note: The 64-bit toolchain in OS X v10.4 and later has additional compiler flags (and a few deprecated flags). These are described in more detail in 64-Bit Transition Guide.

Some common flags include:

-flat_namespace (in LDFLAGS): Changes from a two-level to a single-level (flat) namespace. By default, OS X builds libraries and applications with a two-level namespace where references to dynamic libraries are resolved to a definition in a specific dynamic library when the image is built. Use of this flag is generally discouraged, but in some cases, is unavoidable. For more information, see Understanding Two-Level Namespaces.

-bundle (in LDFLAGS): Produces a Mach-O bundle format file, which is used for creating loadable plug-ins. See the ld man page for more discussion of this flag.

-bundle_loader executable (in LDFLAGS): Specifies which executable will load a plug-in. Undefined symbols in that bundle are checked against the specified executable as if it were another dynamic library, thus ensuring that the bundle will actually be loadable without missing symbols.

-framework framework (in LDFLAGS): Links the executable being built against the listed framework. For example, you might add -framework vecLib to include support for vector math.

-mmacosx-version-min=version: Specifies the version of OS X you are targeting. You must target your compile for the oldest version of OS X on which you want to run the executable. In addition, you should install and use the cross-development SDK for that version of OS X. For more information, see SDK Compatibility Guide.

Note: OS X also uses this value to determine the UNIX conformance behavior of some APIs. For more information, read Unix 03 Conformance Release Notes.

Note: OS X uses a single-pass linker. Make sure that you put your framework and library options after the object (.o) files. For more information about the Apple linker, read the manual page for ld.

More extensive discussion for the compiler in general can be found at http://developer.apple.com/releasenotes/DeveloperTools/.

Understanding Two-Level Namespaces

By default, OS X builds libraries and applications with a two-level namespace. In a two-level namespace environment, when you compile a new dynamic library, any references that the library might make to other dynamic libraries are resolved to a definition in those specific dynamic libraries.

The two-level namespace design has many advantages for Carbon applications. However, it can cause problems for many traditional UNIX applications if they were designed to work in a flat namespace environment.

For example, suppose one library, call it libfoo, uses another library, libbar, for its implementation of the function barIt. Now suppose an application wants to override the use of libbar with a compressed version, called libzbar. Since libfoo was linked against libbar at compile time, this is not possible without recompiling libfoo.

To allow the application to override references made by libfoo to libbar, you would use the flag -flat_namespace. The ld man page has a more detailed discussion of this flag.

If you are writing libraries from scratch, it may be worth considering the two-level namespace issue in your design. If you expect that someone may want to override your library’s use of another library, you might have an initializer routine that takes pointers to the second library as its arguments, and then use those pointers for the calls instead of calling the second library directly.
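A minimal sketch of such an initializer follows, reusing the libfoo and barIt names from the example above. Everything else (bar_it_fn, foo_init, foo_compute, and the stand-in implementations) is hypothetical and only illustrates the pattern of calling through a replaceable pointer.

```c
#include <assert.h>
#include <stddef.h>

/* Signature of the one libbar function that libfoo depends on. */
typedef int (*bar_it_fn)(int);

/* Stand-in for libbar's barIt; a real libfoo would link the original. */
static int default_bar_it(int x) { return x + 1; }

/* Stand-in for an alternative implementation, such as one from libzbar. */
int zbar_it(int x) { return x + 10; }

/* libfoo calls through this pointer instead of naming barIt directly. */
static bar_it_fn foo_bar_it = default_bar_it;

/* Initializer: pass a replacement, or NULL to restore the default. */
void foo_init(bar_it_fn replacement)
{
    foo_bar_it = replacement ? replacement : default_bar_it;
}

/* A libfoo entry point that uses whichever barIt is installed. */
int foo_compute(int x)
{
    assert(foo_bar_it != NULL);
    return 2 * foo_bar_it(x);
}
```

An application that wants the alternative implementation calls foo_init(zbar_it) before using libfoo; no relinking of libfoo is needed.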

Alternately, you might use a plug-in architecture in which the calls to the outside library are made from a plug-in that could be easily replaced with a different plug-in for a different outside library. See Dynamic Libraries and Plug-ins for more information.

For the most part, however, unless you are designing a library from scratch, it is not practical to avoid using -flat_namespace if you need to override a library’s references to another library.

If you are compiling an executable (as opposed to a library), you can also use -force_flat_namespace to tell dyld to use a flat namespace when loading any dynamic libraries and bundles loaded by the binary. This is usually not necessary, however.

Executable Format

The only executable format that the OS X kernel understands is the Mach-O format. Some bridging tools are provided for classic Macintosh executable formats, but Mach-O is the native format. It is very different from the commonly used Executable and Linking Format (ELF). For more information on Mach-O, see OS X ABI Mach-O File Format Reference.

Dynamic Libraries and Plug-ins

Dynamic libraries and plug-ins behave differently in OS X than in other operating systems. This section explains some of those differences.

Using Dynamic Libraries at Link Time

When linking an executable, OS X treats dynamic libraries just like libraries in any other UNIX-based or UNIX-like operating system. If you have a library called libmytool.a, libmytool.dylib, or libmytool.so, for example, all you have to do is pass -lmytool on the link line (for example, cc -o myprogram main.c -lmytool, where myprogram and main.c are placeholder names).

As a general rule, you should avoid creating static libraries (.a) except as a temporary side product of building an application. You must run ranlib on any archive file before you attempt to link against it.

Using Dynamic Libraries Programmatically

OS X makes heavy use of dynamically linked code. Unlike other binary formats such as ELF and xcoff, Mach-O treats plug-ins differently than it treats shared libraries.

The preferred mechanism for dynamic loading of shared code, beginning in OS X v10.4, is the dlopen family of functions. These are described in the man page for dlopen. The ld and dyld man pages give more specific details of the dynamic linker’s implementation.

Note: By default, the names of dynamic libraries in OS X end in .dylib instead of .so. You should be aware of this when writing code to load shared code in OS X.
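The sketch below shows one way to account for the suffix difference when loading shared code with dlopen. The helper names open_shared_library and find_symbol are hypothetical, and the lib prefix plus the 256-byte name buffer are assumptions made for illustration. On some older Linux systems you may also need to link with -ldl.

```c
#include <assert.h>
#include <dlfcn.h>
#include <stdio.h>

/*
 * Try to load lib<base>.dylib first (the OS X convention) and then
 * lib<base>.so. Returns a dlopen handle, or NULL if neither loads.
 */
void *open_shared_library(const char *base)
{
    static const char *const suffixes[] = { ".dylib", ".so" };
    char name[256];

    assert(base != NULL);
    for (size_t i = 0; i < sizeof suffixes / sizeof suffixes[0]; i++) {
        int n = snprintf(name, sizeof name, "lib%s%s", base, suffixes[i]);
        if (n < 0 || (size_t)n >= sizeof name)
            continue;                /* name too long for the buffer */
        void *handle = dlopen(name, RTLD_NOW | RTLD_LOCAL);
        if (handle != NULL)
            return handle;
    }
    return NULL;
}

/* Look up a symbol in a loaded library; returns NULL if it is absent. */
void *find_symbol(void *handle, const char *symbol)
{
    assert(handle != NULL && symbol != NULL);
    return dlsym(handle, symbol);
}
```

When a load fails, dlerror returns a description of the most recent error; release a handle with dlclose when it is no longer needed.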

Libraries that you are familiar with from other UNIX-based systems might not be in the same location in OS X. This is because OS X has a single dynamically loadable framework, libSystem, that contains much of the core system functionality. This single module provides the standard C runtime environment, input/output routines, math libraries, and most of the normal functionality required by command-line applications and network services.

The libSystem library also includes functions that you would normally expect to find in libc and libm, RPC services, and a name resolver. Because libSystem is automatically linked into your application, you do not need to explicitly add it to the compiler’s link line. For your convenience, many of these libraries exist as symbolic links to libSystem, so while explicitly linking against -lm (for example) is not needed, it will not cause an error.

To learn more about how to use dynamic libraries, see Dynamic Library Programming Topics.

Compiling Dynamic Libraries and Plugins

For the most part, you can treat dynamic libraries and plugins the same way as on any other platform if you use GNU libtool. This tool is installed in OS X as glibtool to avoid a name conflict with NeXT libtool. For more information, see the manual page for glibtool.

You can also create dynamic libraries and plugins manually if desired. As mentioned in Using Dynamic Libraries Programmatically, dynamic libraries and plugins are not the same thing in OS X. Thus, you must pass different flags when you create them.

To create dynamic libraries in OS X, pass the -dynamiclib flag.

To create plugins, pass the -bundle flag.

Because plugins can be tailored to a particular application, the OS X compiler provides the ability to check these plugins for loadability at compile time. To take advantage of this feature, pass the -bundle_loader flag, followed by the path to the application's executable, together with -bundle when you build the plugin.

If the compiler finds symbol requests in the plugin that cannot be resolved in the application, you will get a link error. This means that you must use the -l flag to link against any libraries that the plugin requires as though you were building a complete executable.

Important: OS X does not support the concept of weak linking as it is found in systems like Linux. If you override one symbol, you must override all of the symbols in that object file.

To learn more about how to create and use dynamic libraries, see Dynamic Library Programming Topics.

Bundles

In the OS X file system, some directories store executable code and the software resources related to that code in one discrete package. These packages, known as bundles, come in two varieties: application bundles and frameworks.

You should be familiar with both types during the basic porting process. In particular, you should be aware of how to use frameworks, since you may need to link against the contents of a framework when porting your application.

Application Bundles

Application bundles are special directories that appear in the Finder as a single entity. Having only one item allows a user to double-click it to get the application with all of its supporting resources. If you are building Mac apps, you should make application bundles. Xcode builds them by default if you select one of the application project types. More information on application bundles is available in Bundles vs. Installers and in Mac Technology Overview.

Frameworks

A framework is a type of bundle that packages a shared library with the resources that the library requires. Depending on the library, this bundle could include header files, images, and reference documentation. If you are trying to maintain cross-platform compatibility, you may not want to create your own frameworks, but you should be aware of them because you might need to link against them. For example, you might want to link against the Core Foundation framework. Since a framework is just one form of a bundle, you can do this by linking against /System/Library/Frameworks/CoreFoundation.framework with the -framework flag. A more thorough discussion of frameworks is in Mac Technology Overview.

For More Information

You can find additional information about bundles in Mac Technology Overview.

Handling Multiply Defined Symbols

A multiply defined symbol error occurs if there are multiple definitions for any symbol in the object files that you are linking together. You can specify the following flags to modify the handling of multiply defined symbols under certain circumstances:

-multiply_defined treatment: Specifies how multiply defined symbols in dynamic libraries should be treated when -twolevel_namespace is in effect. The value for treatment must be one of:

error: Treat multiply defined symbols as an error.

warning: Treat multiply defined symbols as a warning.

suppress: Ignore multiply defined symbols.

The default behavior is to treat multiply defined symbols in dynamic libraries as warnings when -twolevel_namespace is in effect.

-multiply_defined_unused treatment: Specifies how unused multiply defined symbols in dynamic libraries should be treated when -twolevel_namespace is in effect. An unused multiply defined symbol is a symbol defined in the output that is also defined in one of the dynamic libraries, but for which the symbol in the dynamic library is not used by any reference in the output. The value for treatment must be error, warning, or suppress. The default for unused multiply defined symbols is to suppress these messages.

Predefined Macros

The following macros are predefined in OS X:

__OBJC__: This macro is defined when your code is being compiled by the Objective-C compiler. By default, this occurs when compiling a .m file or any header included by a .m file. You can force the Objective-C compiler to be used for a .c or .h file by passing the -ObjC or -ObjC++ flags.

__cplusplus: This macro is defined when your code is being compiled by the C++ compiler (either explicitly or by passing the -ObjC++ flag).

__ASSEMBLER__: This macro is defined when compiling .s files.

__NATURAL_ALIGNMENT__: This macro is defined on systems that use natural alignment. When using natural alignment, an int is aligned on a sizeof(int) boundary, a short int is aligned on a sizeof(short) boundary, and so on. It is defined by default when you're compiling code for PowerPC architectures. It is not defined when you use the -malign-mac68k compiler switch, nor is it defined on Intel architectures.

__MACH__: This macro is defined if Mach system calls are supported.

__APPLE__: This macro is defined in any Apple computer.

__APPLE_CC__: This macro is set to an integer that represents the version number of the compiler. This lets you distinguish, for example, between compilers based on the same version of GCC, but with different bug fixes or features. Larger values denote later compilers.

__BIG_ENDIAN__ and __LITTLE_ENDIAN__: These macros tell whether the current architecture uses little endian or big endian byte ordering. For more information, see Compiling for Multiple CPU Architectures.

Note: To mark a section of code to be compiled only on OS X, test for both the __APPLE__ and __MACH__ macros. The macro __UNIX__ is not defined in OS X.

Other Porting Tips

This section describes alternatives to certain commonly used APIs.

Headers

The following headers commonly found in UNIX, BSD, or Linux operating systems are either unavailable or are unsupported in OS X:

alloc.h: This file does not exist in OS X, but the functionality does exist. You should include stdlib.h instead. Alternatively, you can declare the prototypes you need yourself.

ftw.h: The ftw function traverses the directory hierarchy and calls a function to get information about each file. However, fts.h does not contain a single function equivalent to ftw.

One alternative is to use fts_open, fts_children, and fts_close to implement such a file traversal. To do this, use the fts_open function to get a handle to the file hierarchy, use fts_read to get information on each file, and use fts_children to get a link to a list of structures containing information about files in a directory.

Alternatively, you can use opendir, readdir and closedir with recursion to achieve the same result.

For example, to get a description of each file located in /usr/include using fts.h, the code would be as follows:
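A minimal sketch of such a listing, assuming the fts interface described in the fts manual page: the function name list_directory is hypothetical, and the choice of FTS_PHYSICAL with FTS_NOCHDIR is one reasonable set of options rather than a requirement.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <fts.h>
#include <stdio.h>

/*
 * Print the kind and name of each entry directly inside dir.
 * Returns the number of entries, or -1 if dir cannot be read
 * as a directory. Subdirectories are listed but not entered.
 */
long list_directory(const char *dir)
{
    assert(dir != NULL);

    char *paths[] = { (char *)dir, NULL };
    FTS *tree = fts_open(paths, FTS_PHYSICAL | FTS_NOCHDIR, NULL);
    if (tree == NULL)
        return -1;

    /* The first fts_read returns the root itself, in preorder. */
    FTSENT *root = fts_read(tree);
    if (root == NULL || root->fts_info != FTS_D) {
        fts_close(tree);
        return -1;
    }

    /* fts_children yields a linked list of the entries in that directory. */
    long count = 0;
    for (FTSENT *e = fts_children(tree, 0); e != NULL; e = e->fts_link) {
        const char *kind = (e->fts_info == FTS_D) ? "dir"
                         : (e->fts_info == FTS_F) ? "file" : "other";
        printf("%s\t%s\n", kind, e->fts_name);
        count++;
    }

    fts_close(tree);
    return count;
}
```

Calling list_directory("/usr/include") prints one line per entry in that directory.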

See the manual page for fts to understand the structures and macros used in the code. The sample code above shows a very simplistic file traversal. For instance, it does not consider possible subdirectories existing in the directory being searched.

getopt.h: Not supported; use unistd.h instead.

lcrypt.h: Not supported; use unistd.h instead.

malloc.h: Not supported; use stdlib.h instead.

mm.h: This header is supported in Linux for memory mapping, but is not supported in OS X. In OS X, you can use mmap to map files into memory. If you wish to map devices, use the I/O Kit framework instead.

module.h: Kernel modules should be loaded using the KextManager API in the I/O Kit framework. For more information, see KextManagerLoadKextWithURL and related functions. The modules themselves must be compiled against the Kernel framework. For more information, see IOKit Fundamentals.

nl_types.h: Use the CFBundleCopyLocalizedString API in Core Foundation for similar localization functionality.

ptms.h: Although pseudo-TTYs are supported in OS X, this header is not. The implementation of pseudo-TTYs is very different from Linux. For more information, see the pty manual page.

stream.h: This header file is not present in OS X. For file streaming, use iostream.h.

stropts.h: Not supported.

swapctl.h: OS X does not support this header file. You can use the header file swap.h to implement swap functionality. The swap.h header file contains many functions that can be used for swap tuning.

termio.h: This header file is obsolete, and has been replaced by termios.h, which is part of the POSIX standard. These two header files are very similar. However, termios.h does not include the same header files as termio.h. Thus, you should be sure to look directly at the termios.h header to make sure it includes everything your application needs.

utmp.h: Deprecated; use utmpx.h instead.

values.h: Not supported; use limits.h instead.

wchar.h: Although this functionality is available, you should generally use the CFStringRef API in Core Foundation instead.

Functions

The following functions commonly seen in other Unix, Linux, or BSD operating systems are not supported or are discouraged in OS X.

btowc, wctob: Although OS X supports the wchar API, the preferred way to work with international strings is the CFStringRef API, which is part of the Core Foundation framework. Some of the APIs available in Core Foundation are CFStringGetSystemEncoding, CFStringCreateCopy, CFStringCreateMutable, and so on. See the Core Foundation documentation for a complete list of supported APIs.

catopen, catgets, catclose: Because nl_types.h is not supported, these functions are not supported either. These functions give access to localized strings. There is no direct equivalent in OS X. In general, you should use Core Foundation to access localized resources (CFBundleCopyLocalizedString, for example).

crypt: The crypt function performs password encryption, based on the NBS Data Encryption Standard (DES). Additional code has been added to deter key search attempts. OS X's version of crypt behaves very similarly to the Linux version, except that it encrypts a 64-bit constant rather than a 128-bit constant. This function is declared in the unistd.h header file rather than lcrypt.h.

Note: The linker flag -lcrypt is not supported in OS X.

dysize: This function is not supported in OS X. Its calculation for leap year is based on:
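The standard Gregorian leap-year rule, sketched here as a small replacement. The names is_leap_year and days_in_year are hypothetical, chosen to avoid clashing with a system-provided dysize, and the restriction to positive years is an assumption of this sketch.

```c
#include <assert.h>

/* Gregorian rule: every 4th year, except centuries not divisible by 400. */
int is_leap_year(int year)
{
    return year % 4 == 0 && (year % 100 != 0 || year % 400 == 0);
}

/* Replacement for dysize: the number of days in the given year. */
int days_in_year(int year)
{
    assert(year > 0);                /* this sketch only handles years >= 1 */
    return is_leap_year(year) ? 366 : 365;
}
```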

You can either use this code to implement this functionality, or you can use any of the existing APIs in time.h to do something similar.

ecvt, fcvt: Discouraged in OS X. Use sprintf, snprintf, and similar functions instead.

fcloseall: This function is an extension to fclose. Although OS X supports fclose, fcloseall is not supported. You can use fclose to implement fcloseall by storing the file pointers in an array and iterating through the array.
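The array-based approach to fcloseall can be sketched as follows. The names tracked_fopen and close_all_tracked and the fixed capacity of 64 are assumptions for illustration. Unlike fcloseall, this version only closes streams that were opened through the wrapper.

```c
#include <assert.h>
#include <stdio.h>

#define MAX_TRACKED 64               /* arbitrary capacity for this sketch */

static FILE *tracked[MAX_TRACKED];
static int tracked_count = 0;

/* Open a file and remember its pointer; NULL on failure or when full. */
FILE *tracked_fopen(const char *path, const char *mode)
{
    assert(path != NULL && mode != NULL);
    if (tracked_count >= MAX_TRACKED)
        return NULL;
    FILE *fp = fopen(path, mode);
    if (fp != NULL)
        tracked[tracked_count++] = fp;
    return fp;
}

/* Close every remembered stream; 0 on success, EOF if any close failed. */
int close_all_tracked(void)
{
    int result = 0;
    for (int i = 0; i < tracked_count; i++) {
        if (fclose(tracked[i]) == EOF)
            result = EOF;
    }
    tracked_count = 0;
    return result;
}
```

A stream opened with tracked_fopen should not also be closed directly with fclose, because close_all_tracked would then close it a second time.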

getmntent, setmntent, addmntent, endmntent, hasmntopt: In general, volumes in OS X are not in /etc/fstab. However, to the extent that they are, you can get similar functionality from getfsent and related functions.

poll: This API is partially supported in OS X. It does not support polling devices.

sbrk, brk: The brk and sbrk functions are historical curiosities left over from earlier days before the advent of virtual memory management. Although they are present on the system, they are not recommended.

shmget: This API is supported but is not recommended. shmget can allocate only a limited number of memory blocks. When several applications use shmget, this limit may change and cause problems for the other applications. In general, you should either use mmap for mapping files into memory or use the POSIX shm_open function and related functions for creating non-file-backed shared memory.
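For the file-mapping alternative, a minimal mmap sketch is shown below. The function count_newlines_mapped is a hypothetical example that maps a file read-only and scans it; it is not a replacement for shmget itself.

```c
#include <assert.h>
#include <fcntl.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/*
 * Count newline characters in a file by mapping it read-only.
 * Returns the count, or -1 if the file cannot be opened or mapped.
 */
long count_newlines_mapped(const char *path)
{
    assert(path != NULL);

    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;

    struct stat st;
    if (fstat(fd, &st) < 0) {
        close(fd);
        return -1;
    }
    if (st.st_size == 0) {           /* zero-length mappings are rejected */
        close(fd);
        return 0;
    }

    const char *data = mmap(NULL, (size_t)st.st_size, PROT_READ,
                            MAP_PRIVATE, fd, 0);
    close(fd);                       /* the mapping stays valid after close */
    if (data == MAP_FAILED)
        return -1;

    long count = 0;
    for (off_t i = 0; i < st.st_size; i++) {
        if (data[i] == '\n')
            count++;
    }

    munmap((void *)data, (size_t)st.st_size);
    return count;
}
```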

swapon, swapoff: These functions are not supported in OS X.

Utilities

The chapter Designing Scripts for Cross-Platform Deployment in Shell Scripting Primer describes a number of cross-platform compatibility issues that you may run into with command-line utilities that are commonly scripted.

This section lists several commands that are primarily of interest to people porting compiled applications and drivers, rather than general-purpose scripting.

ldd: The ldd command is not available in OS X. However, you can use the command otool -L to get the same functionality that ldd provides. The otool command displays specified parts of object files or libraries. The option -L displays the name and version numbers of the shared libraries that an object file uses. To see all the existing options, see the manual page for otool.

lsmod: lsmod is not available on OS X, but other commands exist that offer similar functionality:

kextutil: Loads, diagnoses problems with, and generates symbols for kernel extensions.

kextstat: Prints statistics about currently loaded drivers and other kernel extensions.

kextload: Loads the kernel module for a device driver or other kernel extensions. This command is a basic command intended for use in scripts. For developer purposes, use kextutil instead.

kmodunload: Unloads the kernel module for a device driver or other kernel extensions. This command is a basic command intended for use in scripts. For developer purposes, use kextutil instead.

For more information about loading kernel modules, see Kernel Extension Programming Topics.

Copyright © 2002, 2012 Apple Inc. All Rights Reserved. Terms of Use | Privacy Policy | Updated: 2012-06-11