Httrack Website Copier For Mac Os

Best free website copier: HTTrack. It is completely free and available for most versions of Windows. HTTrack's flagship product is HTTrack, an easy-to-use offline browser utility. Web development occurs in different environments and has to be tested on multiple platforms, so a website ripper that runs on all of them is a boon for developers. ScrapBook, WebCopy, the Mozilla Archive Format, and HTTrack are among the tools described as compatible with multiple platforms, and SiteSucker is an option for Mac.

Sometimes you need to download a whole website for offline reading. Maybe your internet connection is unreliable and you want to save some sites, or you came across something you want to keep for later reference. Whatever the reason, you need website ripper software to download a partial or full website onto your hard drive for offline access.

It’s easy to get updated content from a website in real time with an RSS feed. However, there is another way to keep your favorite content at hand. A website ripper lets you download an entire website and save it to your hard drive for browsing without an internet connection. Three essential structures are used to build a website: sequences, hierarchies, and webs. These structures determine how the information is displayed and organized. Below is a list of the best website ripper software in 2020, based on ease of use, popularity, and functionality.

1. Octoparse

Octoparse is a simple and intuitive web crawler for data extraction without coding. It runs on both Windows and Mac OS, so it can be used for web scraping on multiple types of devices. Whether you are a first-time user, an experienced expert, or a business owner, it aims to meet your needs with its enterprise-class service.

To make setup and use easier, Octoparse provides 'Web Scraping Templates' covering over 30 websites so that beginners can get comfortable with the software. The templates let users capture data without configuring a task. For seasoned pros, 'Advanced Mode' helps you customize a crawler within seconds using its smart auto-detection feature. With Octoparse, you can extract enterprise-volume data within minutes. You can also set up Scheduled Cloud Extraction, which lets you obtain dynamic data in real time and keep a tracking record.

Website: https://www.octoparse.com/download

Minimum Requirements

Windows 10, 8, 7, XP, Mac OS

Microsoft .NET Framework 3.5 SP1

56MB of available hard disk space

2. HTTrack

HTTrack is a very simple yet powerful freeware website ripper. It can download an entire website from the Internet to your PC. Start with the wizard and follow it through the settings. Under “Set options” you can choose how many connections are opened concurrently while downloading web pages. You can get the photos, files, and HTML code from entire directories, update an existing mirrored website, and resume interrupted downloads.

The downside is that it cannot be used to download a single page of a website. Instead, it downloads the entire root of the website. In addition, it takes a while to manually exclude file types if you only want to download particular ones.

Website: http://www.httrack.com/

Minimum Requirements

Windows 10, 8.1, 8, 7, Vista SP2

Microsoft .NET Framework 4.6

20MB of available hard disk space

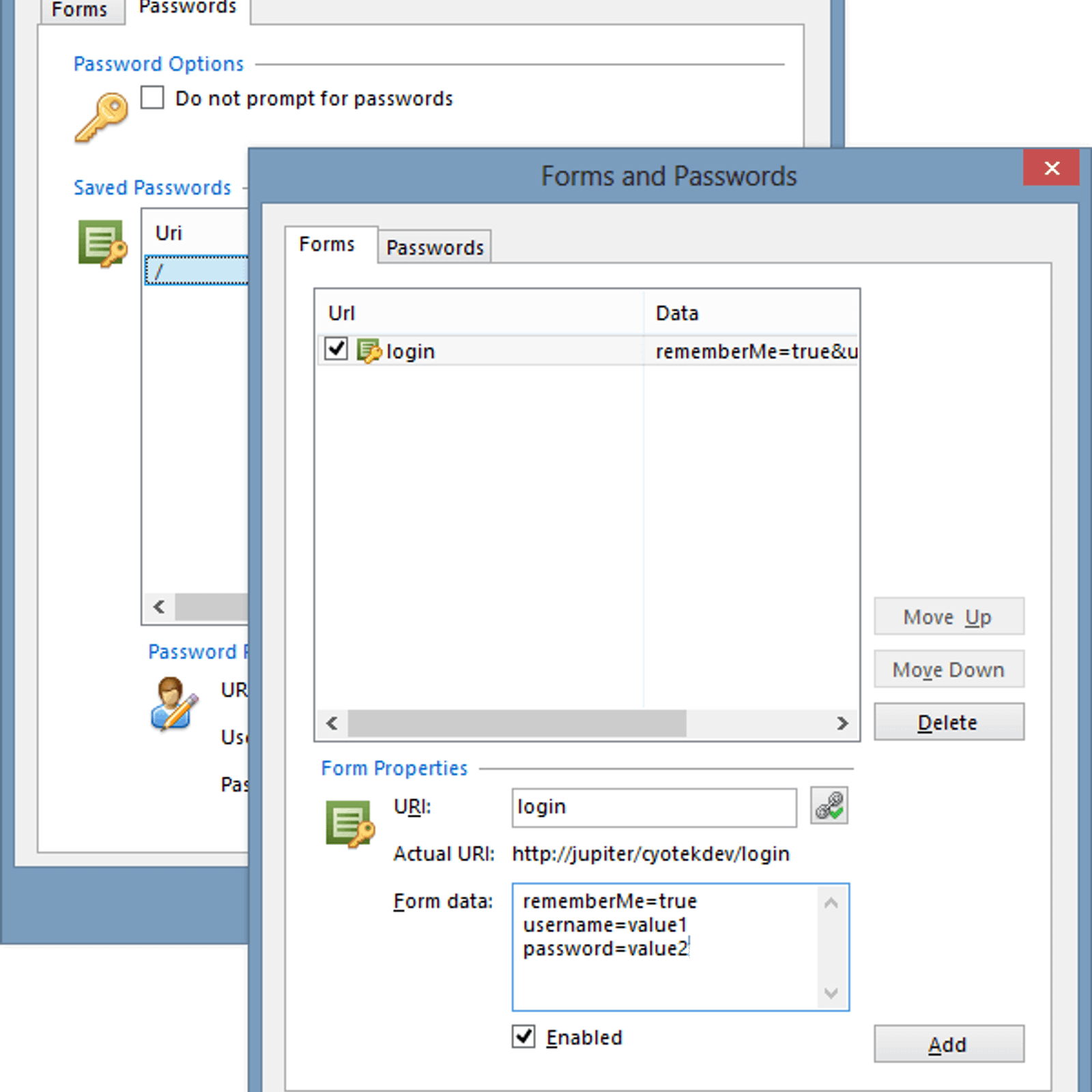

3. Cyotek WebCopy

WebCopy is a website copier that lets you copy partial or full websites locally for offline reading. It examines the structure of a website as well as its linked resources, including style sheets, images, videos, and more. Links to these resources are automatically remapped to match their local paths.

The downside is that Cyotek WebCopy can’t parse, crawl, or scrape websites that rely on JavaScript or other dynamic functions. It also can’t download the raw source code of a website, only what the HTTP server returns.

Website: https://www.cyotek.com/cyotek-webcopy/downloads

Minimum Requirements

Windows, Linux, Mac OSX

Microsoft .NET Framework 4.6

3.76 MB of available hard disk space

4. Getleft

Getleft is a free and easy-to-use website grabber that can be used to rip a website. It downloads an entire website and offers a simple interface with multiple options. After you launch Getleft, you can enter a URL and choose the files that should be downloaded before you begin downloading the website.

Website: https://sourceforge.net/projects/getleftdown/

Minimum Requirements

Windows

2.5 MB of available hard disk space

Cyotek WebCopy is a free tool for automatically downloading the content of a website onto your local device.

WebCopy will scan the specified website and download its content. Links to resources such as style sheets, images, and other pages in the website will automatically be remapped to match the local path. Using its extensive configuration, you can define which parts of a website will be copied and how. For example, you could make a complete copy of a static website for offline browsing, or download all images or other resources.
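The link remapping described above can be illustrated with a small Python sketch. This is not WebCopy's actual implementation: the function names `local_path_for` and `remap_link`, the `mirror` root folder, and the choice of `index.html` for directory-style URLs are all assumptions made for illustration.

```python
import posixpath
from urllib.parse import urlsplit


def local_path_for(url: str, root: str = "mirror") -> str:
    """Map an absolute URL to a file path under `root` (hypothetical layout)."""
    parts = urlsplit(url)
    path = parts.path or "/"
    # Directory-style URLs get an index.html so they can be opened from disk.
    if path.endswith("/"):
        path += "index.html"
    return posixpath.join(root, parts.netloc, path.lstrip("/"))


def remap_link(page_url: str, target_url: str, root: str = "mirror") -> str:
    """Return the relative link from the saved page to the saved target."""
    page_dir = posixpath.dirname(local_path_for(page_url, root))
    return posixpath.relpath(local_path_for(target_url, root), start=page_dir)


# A page in /docs/ that links to a stylesheet in /css/.
link = remap_link(
    "https://example.com/docs/guide.html",
    "https://example.com/css/site.css",
)
print(link)
```

Rewriting each link as a path relative to the saved page is one way to keep the copy browsable no matter where the mirror folder is moved on disk.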

What can WebCopy do?

WebCopy will examine the HTML mark-up of a website and attempt to discover all linked resources such as other pages, images, videos, file downloads - anything and everything. It will download all of these resources, and continue to search for more. In this manner, WebCopy can 'crawl' an entire website and download everything it sees in an effort to create a reasonable facsimile of the source website.
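The crawl loop described above (parse the HTML, collect linked resources, fetch them, repeat) might look roughly like the following Python sketch. It is a generic breadth-first crawler, not WebCopy's code. The `crawl` function, the `LinkCollector` class, and the in-memory `SITE` dictionary that stands in for real HTTP responses are hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect URLs from href/src attributes in static HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def crawl(start_url, fetch):
    """Breadth-first crawl of one host. `fetch(url)` returns HTML text or None."""
    host = urlsplit(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        html = fetch(url)
        if html is None:  # non-HTML resource (image, stylesheet): nothing to parse
            continue
        collector = LinkCollector()
        collector.feed(html)
        for link in collector.links:
            absolute = urljoin(url, link)
            # Stay on the starting host and never queue the same URL twice.
            if urlsplit(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return order


# Hypothetical in-memory "site" standing in for real HTTP responses.
SITE = {
    "https://example.com/": '<a href="/about.html">About</a><img src="logo.png">',
    "https://example.com/about.html": '<a href="/">Home</a><a href="https://other.example/">Ext</a>',
}

pages = crawl("https://example.com/", SITE.get)
print(pages)
```

The `seen` set is what stops the crawler from looping forever when pages link back to each other, and the host check keeps it from wandering off to external sites.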

WebCopy does not include a virtual DOM or any form of JavaScript parsing. If a website makes heavy use of JavaScript to operate, it is unlikely WebCopy will be able to make a true copy if it is unable to discover all of the website due to JavaScript being used to dynamically generate links.
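A short Python sketch shows why links generated by JavaScript are invisible to a tool that only reads HTML mark-up. The page content and the `HrefCollector` class are made up for illustration. The parser stands in for any static HTML scanner, not for WebCopy specifically.

```python
from html.parser import HTMLParser


class HrefCollector(HTMLParser):
    """Collect href values from <a> tags present in the static mark-up."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.hrefs.extend(v for k, v in attrs if k == "href" and v)


# Hypothetical page: one link is in the HTML, the other is created by a script.
PAGE = """
<a href="/static.html">Static link</a>
<script>
  const a = document.createElement("a");
  a.href = "/generated.html";
  document.body.appendChild(a);
</script>
"""

collector = HrefCollector()
collector.feed(PAGE)
found = collector.hrefs
print(found)
```

Only the static link is found, because the script is never executed. A browser would add the second link at run time, so a copy made this way would be missing `/generated.html`.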

WebCopy does not download the raw source code of a website; it can only download what the HTTP server returns. While it will do its best to create an offline copy of a website, advanced data-driven websites may not work as expected once they have been copied.